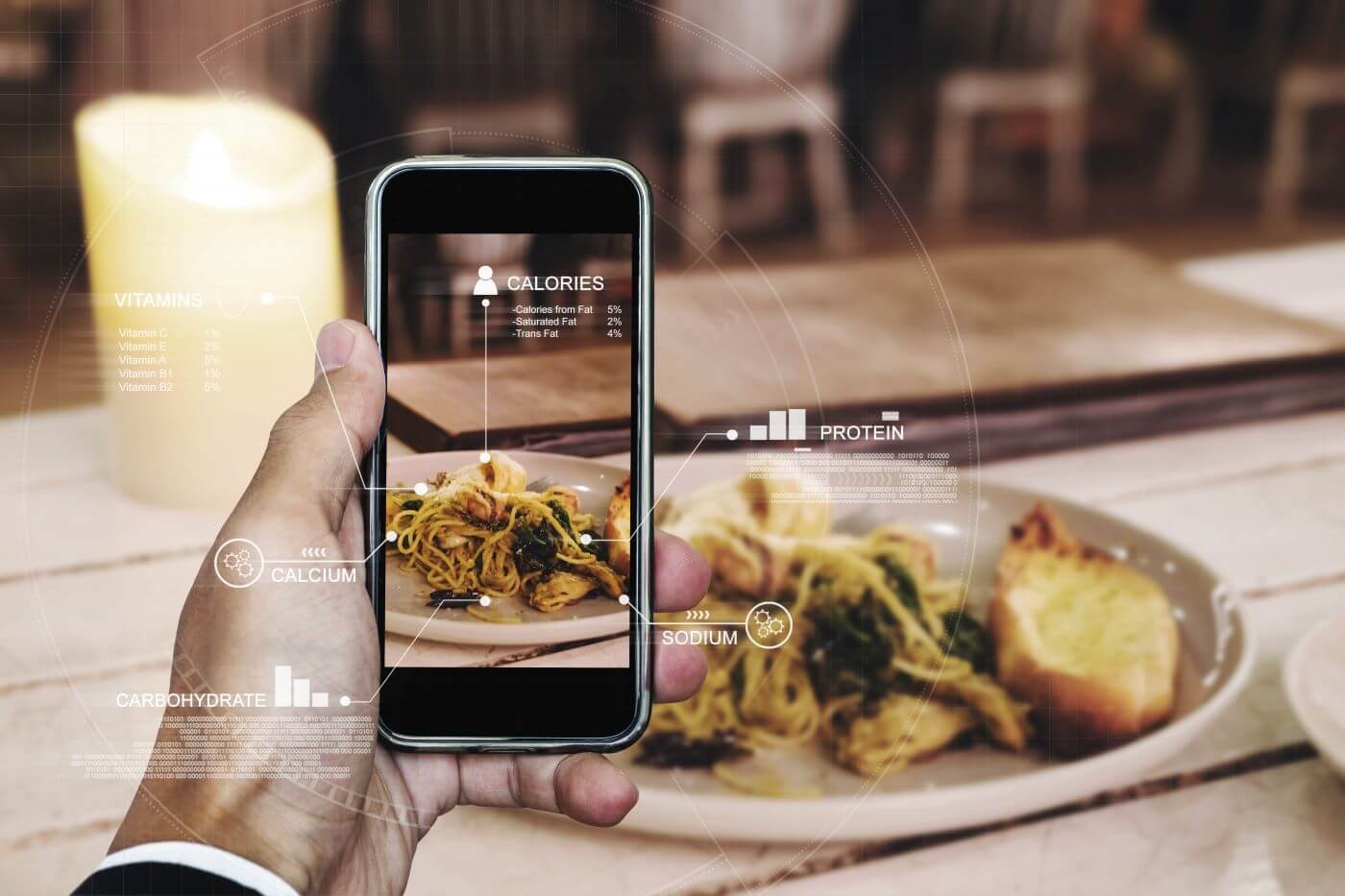

“Lens that looks after your nutrition.”

A proposition for AI-powered AR glasses that analyze physiological health data, recognize food, scan surroundings, and suggest personalized healthy meals in real time.

Theme: Data Mirrors

Research, conceptualise and design a product, digital ecosystem or interactive material intervention that responds to the theme Data Mirrors. The project can sit anywhere between two diametrically opposed extremes, from a commercially focused product to a speculative social intervention.

UX/UI Designer

3 months

Research and Analysis

To understand how users make food decisions and how physiological signals (like hydration or blood pressure) can inform real-time, health-conscious eating behavior.

How do users currently track health and food?

Where are the gaps in real-time guidance?

How effective are existing UX/UI solutions?

Too Many Touchpoints

Users are required to log every meal and drink manually.

Food tracking apps often depend on barcode scanning or search menus.

Switching between touchpoints (watch, phone, app) to check vitals or track food breaks the user's flow.

Logging requires visual focus, typing, and confirmation, which is especially inconvenient while eating or cooking.

High friction leads to low user engagement over time.

No Environmental Awareness

Apps can’t detect where the user is or what food is physically present.

They don't know if you're in a kitchen, store, or near a food source.

Context-free advice feels generic and often irrelevant.

Lack of Real-Time Suggestions

Most apps only provide retrospective analysis (after meals or at day’s end).

Health devices show vitals but rarely link them to actionable food advice.

Users don’t get help at the moment they need it: while making food decisions.

Users want automation, not input.

Systems must work in the background, detecting and suggesting without manual effort.

Switching apps or typing disrupts flow—wearables should remove these points of friction.

AR interface ➝ frictionless, ambient guidance

Context is everything.

Food guidance must be aware of location, timing, and surroundings to be meaningful.

Object/environment recognition ➝ contextual nudges

Data should lead to decisions.

Raw stats (like 120/80 BP) mean little to most users unless paired with smart suggestions.

Real-time biometric data ➝ smarter food suggestions

Define and Ideate

This HUD overlays real-time, personalized visual feedback onto the user’s field of view without requiring manual input or disrupting their environment.

Physiological signals: Displays vital signs such as heart rate (color-coded), blood pressure, hydration status, and glucose level.

Food recognition: Displays nutritional value and records food passively.

Surrounding context: Scans surroundings (e.g., kitchen, bakery) to offer timely suggestions.

Activity tracking: Tracks user activity and prompts the user to choose healthier food options.
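
As a rough illustration of how the four inputs above could feed a single suggestion step, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Vitals` and `Context` structures, the function names, the color bands, and the thresholds are hypothetical placeholders, not values from the project and not clinical guidance.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Vitals:
    """Hypothetical snapshot of the physiological signals shown on the HUD."""
    heart_rate_bpm: int
    systolic_mmhg: int       # carried for display only in this sketch
    diastolic_mmhg: int      # carried for display only in this sketch
    hydration_pct: float     # assumed 0-100 hydration score
    glucose_mg_dl: float


@dataclass
class Context:
    """Hypothetical output of the recognition and activity-tracking steps."""
    place: str                       # e.g. "kitchen", "bakery"
    recognized_food: Optional[str]   # None when no food is in view
    recently_active: bool


def heart_rate_color(bpm: int) -> str:
    """Map heart rate to a HUD color; the bands are placeholder values."""
    if bpm < 100:
        return "green"
    if bpm < 140:
        return "amber"
    return "red"


def suggest(vitals: Vitals, ctx: Context) -> List[str]:
    """Turn raw readings plus context into short nudges (placeholder rules)."""
    nudges: List[str] = []
    if vitals.hydration_pct < 60:
        nudges.append("Hydration is low: drink water")
    if vitals.glucose_mg_dl > 140 and ctx.place == "bakery":
        nudges.append("Glucose is elevated: consider a lower-sugar option")
    if ctx.recently_active and ctx.recognized_food is None:
        nudges.append("You have been active: a protein-rich snack may help")
    return nudges
```

For example, calling `suggest` with a low hydration score and an elevated glucose reading while `place` is `"bakery"` would return the first two nudges, which the HUD could then overlay without any manual input from the user.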

Design